News 2019

December 2019:

Study finds BPA levels in humans dramatically underestimated

“This study raises serious concerns about whether we’ve been careful enough about the safety of this chemical,” said Patricia Hunt, Washington State University professor and corresponding author on the paper.

“What it comes down to is that the conclusions federal agencies have come to about how to regulate BPA may have been based on inaccurate measurements.”

BPA can be found in a wide range of plastics, including food and drink containers, and animal studies have shown that it can interfere with the body’s hormones. In particular, fetal exposure to BPA has been linked to problems with growth, metabolism, behavior, fertility and even greater cancer risk.

Despite this experimental evidence, the FDA has evaluated data from studies measuring BPA in human urine and determined that human exposure to the chemical is at very low and therefore safe levels. This paper challenges that assumption and raises questions about other chemicals, including BPA replacements, that are also assessed using indirect methods.

Hunt’s colleague, Roy Gerona, assistant professor at University of California, San Francisco, developed a direct way of measuring BPA that more accurately accounts for BPA metabolites, the compounds that are created as the chemical passes through the human body.

Previously, most studies had to rely on an indirect process to measure BPA metabolites, using an enzyme solution made from a snail to transform the metabolites back into whole BPA, which could then be measured.

Gerona’s new method is able to directly measure the BPA metabolites themselves without using the enzyme solution.

In this study, a research team composed of Gerona, Hunt and Fredrick vom Saal of University of Missouri compared the two methods, first with synthetic urine spiked with BPA and then with 39 human samples. They found much higher levels of BPA using the direct method, as much as 44 times the mean reported by the National Health and Nutrition Examination Survey (NHANES). The disparity between the two methods increased with more BPA exposure: the greater the exposure, the more the previous method missed.

Gerona, the first author on the paper, said more replication is needed.

“I hope this study will bring attention to the methodology used to measure BPA, and that other experts and labs will take a closer look and assess independently what is happening,” he said.

The research team is conducting further experiments into BPA measurement as well as other chemicals that may also have been measured in this manner, a category that includes environmental phenols such as parabens, benzophenone and triclosan, found in some cosmetics and soaps, and phthalates, found in many consumer products including toys, food packaging and personal care products.

“BPA is still being measured indirectly through NHANES, and it’s not the only endocrine-disrupting chemical being measured this way,” Gerona said. “Our hypothesis now is that if this is true for BPA, it could be true for all the other chemicals that are measured indirectly.” Neuroscience Journal

“Having access to clean air is kind of a basic human right.”

“Outdoor particulate pollution was responsible for an estimated 4.2 million deaths worldwide in 2015, with a majority concentrated in east and south Asia. Millions more fell ill from breathing dirty air.

This fine pollution mainly comes from burning things: coal in power plants, gasoline in cars, chemicals in industrial processes, or woody materials and whatever else ignites during wildfires. The particles are too small for the eye to see (each is about 35 times smaller than a grain of fine beach sand), but in high concentrations they cast a haze in the sky. And, when breathed in, they wreak havoc on human health.

PM2.5 can evade our bodies’ defenses, penetrating deep into the lungs and even entering the bloodstream. It has been shown to exacerbate asthma and other lung disorders, and to increase the risk of heart attack and stroke. This microscopic pollution, named PM2.5 because each particle is smaller than 2.5 micrometers across, has also been linked to developmental problems in children and cognitive impairment in the elderly, as well as premature labor and low birth weights.

Under high levels of particulate pollution, “you can’t function, you can’t thrive,” said Alexandra Karambelas, an environmental analyst and research scientist affiliated with Columbia University. “Having access to clean air is kind of a basic human right.”

Developing and newly industrialized regions experience some of the worst particulate pollution today. But even high-income, developed economies, which have made big strides in reducing such pollution, continue to struggle with the quality of their air.

In the United States, which has some of the cleanest air in the world, fine particulate matter still contributed to 88,000 premature deaths in 2015 — making this pollution more deadly than both diabetes and the flu. And pollution in America has worsened since 2016, reversing years of decline.

Before governments can decide how best to tackle unhealthy air, Dr. Karambelas said, they need to better understand the causes of pollution. “Is it lax standards? No enforcement of the standards?” she asked. “Is something happening regionally that plays a large role?”

The city-level data shown here focuses on average particulate pollution, allowing you to compare air quality across the world. But the amount of pollution you breathe also varies within a city, from neighborhood to neighborhood and block to block.

And pollution does not affect all groups equally.

Last year’s deadly Camp Fire engulfed Paradise, California, in the Sierra Nevada foothills, causing 85 deaths and destroying nearly 19,000 buildings. Smoke from the fire blanketed much of northern California for nearly two weeks, prompting health warnings.

In San Francisco, nearly 200 miles south of Paradise, fine particulate pollution reached nearly 200 micrograms per cubic meter at the worst hour, according to Berkeley Earth, a nonprofit research group that aggregates data from air-quality monitoring sites. Average daily air quality hovered between “unhealthy” and “very unhealthy” for 11 days. Schools were closed and cable car service suspended; protective face masks and air filters sold out at local stores.” NY Times

You’re Tracked Everywhere You Go Online. Use This Guide to Fight Back.

You can’t stop all of it, but you don’t have to give up.

“Here’s what I learned when I recently did an online privacy checkup: Google was sharing my creditworthiness with third parties. If you want Target to stop sharing your information with marketers, you have to call them. And, my favorite: If you would like Hearst, the publishing giant, to stop sharing your physical mailing address with third parties, you have to mail a physical letter with your request to the company’s lawyers.

Cool cool cool. My colleague Kashmir Hill wrote this month about the company Sift, which collects your consumer data and gives you a secret consumer score.

“As consumers, we all have ‘secret scores’: hidden ratings that determine how long each of us waits on hold when calling a business, whether we can return items at a store, and what type of service we receive,” Ms. Hill wrote. “A low score sends you to the back of the queue; high scores get you elite treatment.” (If you’re interested, you can request your own secret dossier by emailing the company, though it is backed up because of the “recent press coverage.” It took two weeks to respond to my request.)

Like many people, I was a little stunned at the intimate level of data that was being collected. Ms. Hill was, too.

“I know that we are tracked in surprising ways, and have reported on those surprising ways extensively, but even I was shocked to get a 400-page file on myself back from a company I’d never heard of,” she told me. “It was bizarre to see what I had ordered from a restaurant three years ago in the report and disturbing to find all the private Airbnb messages that I had sent to hosts. I didn’t think any company beyond Airbnb would have that data.”

It’s no secret that we’re being tracked everywhere online. We all know this; every one of us has a story about an alarmingly specific ad appearing on Facebook, or a directly targeted Amazon promo following us around the internet. But as internet-connected devices become more prevalent in our everyday lives (think smart TVs, smart speakers and smart refrigerators, for example), and as our reliance on smartphones increases, we’re just creating so much more data than we used to, said Bennett Cyphers, a staff technologist at the Electronic Frontier Foundation, a nonprofit digital rights organization that advocates for consumer online privacy.

“There are just more streams of data out there to be aggregated and tied to profiles and sold,” Mr. Cyphers said. “Because people don’t realize that their car is collecting data about their location and sending it off to some server somewhere, they’re less likely to think about that, and companies are less likely to be held accountable for that kind of thing.”

He added: “Information is being shared completely haphazardly, and there’s no accountability at any stage, especially in America.”

“The only people I’ve heard say, ‘Who cares?’ are people who don’t understand the scope of the problem,” Mr. Cyphers said.

“A lot of the tracking systems out there make it easier for law enforcement to gather data without warrants,” he said. “A lot of trackers sell data directly to law enforcement and to Immigrations and Customs Enforcement. I think the bottom line is that it’s creepy at best. It enables manipulative advertising and political messaging in ways that make it a lot easier for the messengers to be unaccountable. It enables discriminatory advertising without a lot of accountability, and in the worst cases it can put real people in real danger.”

Still, there are signs that things could be improving, if slowly. The Cambridge Analytica scandal, Mr. Cyphers said, “dredged up the worst parts of the industry into the press and popular knowledge,” which in some ways forced companies and lawmakers to acknowledge the issue. Sweeping changes, such as the California Consumer Privacy Act and Europe’s General Data Protection Regulation (GDPR), have led the way in giving internet users new rights and protections, and Mr. Cyphers said that “popular awareness and the techlash has opened up room for real regulation.”

But we’re a long way from a privacy utopia.

“As long as you can make a buck and what you’re doing isn’t illegal,” Mr. Cyphers said, “someone’s going to do it.”

What can I do?

First, be more cautious about the information you voluntarily hand over.

“Don’t hand over data unless you have to!” Ms. Hill said. “If a store asks for your email address or ZIP code, say no. When Facebook asks you to upload your contact book, don’t do it. If you’re buying some sensitive product (prenatal vitamins, medication), don’t use your store loyalty card and use cash.”

Added Mr. Cyphers: “Think hard before you enter your email into a form online about why the company actually needs your email and what they might do with it. You can lie. It’s not illegal to put a fake email, or a fake phone number or a fake name in the vast majority of services you sign up for,” he said. “There’s no reason they need it, there’s no reason you have to give it to them.”

Phew! It’s a lot, I know, and unfortunately we’re only scratching the surface; protecting your privacy is a never-ending process that requires constant vigilance. But each of these steps is worth the time investment, and perhaps the most important thing to keep in mind: Don’t let yourself be lulled into a false sense of security.

“I wish I could say it has changed my behavior,” Ms. Hill said when I asked her if reporting her story on Sift has changed her online behavior, “but what’s become clearer and clearer to me in reporting on privacy over the last decade is that you can’t completely stop the data collection (unless you go live in a dark cave sans power).”

She added: “But at the end of the day, there is little we can do as individuals; there’s really a need for change on a more systemic level to give us more control over our data.” NY Times

November 2019:

Bacteria in the gut may alter aging process

“All living organisms, including human beings, coexist with a myriad of microbial species living in and on them, and research conducted over the last 20 years has established their important role in nutrition, physiology, metabolism and behaviour.

Using mice, the team led by Professor Sven Pettersson transplanted gut microbes from old mice (24 months old) into young, germ-free mice (6 weeks old). After eight weeks, the young mice had increased intestinal growth and production of neurons in the brain, known as neurogenesis.

The team showed that the increased neurogenesis was due to an enrichment of gut microbes that produce a specific short chain fatty acid, called butyrate.

Butyrate is produced through microbial fermentation of dietary fibres in the lower intestinal tract and stimulates production of a pro-longevity hormone called FGF21, which plays an important role in regulating the body’s energy and metabolism. As we age, butyrate production is reduced.

The researchers then showed that giving butyrate on its own to the young germ-free mice had the same adult neurogenesis effects.

The study was published in Science Translational Medicine.

“We’ve found that microbes collected from an old mouse have the capacity to support neural growth in a younger mouse,” said Prof Pettersson. “This is a surprising and very interesting observation, especially since we can mimic the neuro-stimulatory effect by using butyrate alone.”

“These results will lead us to explore whether butyrate might support repair and rebuilding in situations like stroke, spinal damage and to attenuate accelerated aging and cognitive decline.”

How gut microbes impact the digestive system

The team also explored the effects of gut microbe transplants from old to young mice on the functions of the digestive system.

With age, the viability of small intestinal cells is reduced, and this is associated with reduced mucus production that makes intestinal cells more vulnerable to damage and cell death.

However, the addition of butyrate helps to better regulate the intestinal barrier function and reduce the risk of inflammation.

The team found that mice receiving microbes from the old donor gained increases in length and width of the intestinal villi, the finger-like projections lining the wall of the small intestine. In addition, both the small intestine and colon were longer in the old mice than the young germ-free mice.

The discovery shows that gut microbes can compensate and support an ageing body through positive stimulation.

This points to a new potential method for tackling the negative effects of ageing by imitating the enrichment and activation of butyrate.

“We can conceive of future human studies where we would test the ability of food products with butyrate to support healthy ageing and adult neurogenesis,” said Prof Pettersson.” Neuroscience Journal

Dark History of Yoga Revealed

Source: New York Times

“Yoga students and teachers are finally grappling with unwanted touch and abuse of power — and the darker history of yoga.

Rachel Brathen had no idea of the deluge headed her way when she asked her Instagram followers if they ever had experienced touch that felt inappropriate in yoga.

This was nearly two years ago. Ms. Brathen, 31 and a yoga studio owner in Aruba, heard from hundreds.

The letters described a constellation of abuses of power and influence, including being propositioned after class and on yoga retreats, forcibly kissed during private meditation sessions and assaulted on post-yoga massage tables.

The complaints also included being touched in ways that felt improper during yoga classes — essentially right in public.

Other professionals whose work can involve touching people, such as massage therapists, are regulated by the government. Yoga teachers are not, and there are no industry trade groups that police these issues.

About five months later, in April 2018, nine women went public in a magazine article about their treatment at the hands of one of yoga’s most important, influential and revered gurus.

Again, very little happened.

Disregarding complaints about unwanted touch, or much worse, has been the way of yoga for decades. Much of the yoga community has been slow or unwilling to respond, maybe because teachers are loath to discredit those they see as gurus. Additionally, many teachers have built their businesses and personal brands in part from associating with these figures.

If you have taken classes called vinyasa, power yoga or flow yoga, you have practiced a version of Ashtanga yoga. Ashtanga was popularized and named by Krishna Pattabhi Jois, who died in 2009 at the age of 93.

But in many cases, Mr. Jois’s adjustments were not about yoga, some former students say. “He would get on top of me, make sure that his genitals were placed directly above my genitals, and he pushed my leg down to the floor and he would hump me,” said Karen Rain, now 53. “He would grind his genitals into my genitals.”

Because of the power and devotion Mr. Jois commanded, because these adjustments were meted out in public — which somehow normalized them — and because of the role that “letting go” plays in yoga, it took years, in some cases, for the women to make sense of their experiences.

“I tried to frame it that he was just adjusting me and that I was supposed to surrender to the asana” — a word that comes from Sanskrit and refers to yoga poses and movements — “and that there was some reason he was doing it that maybe I didn’t understand yet, that if I kept doing it, it would make sense someday, maybe,” Ms. Rain said.

Jubilee Cooke, now 54, studied with Mr. Jois in 1997. He groped her on a daily basis, she said.

“Pattabhi Jois came up from behind me while I was in full lotus position and he grabbed my crotch, he grabbed my genitals and swung me back, lifted me back so that I would land in a yoga push-up,” Ms. Cooke said.

He would lie on top of her and grind into her, as he did with Ms. Rain. Sometimes he would stand behind her while she was in a forward fold. She could see him simulating sexual motions in the air, thrusting his pelvis at her.

She had traveled from Seattle to practice in India, planning to stay for three months. She stayed the duration, returning daily to his studio. “I was just caught up in the culture and what everyone else was doing,” she said.

Ms. Rain and eight other women went public in 2018 in an article in a Canadian publication called The Walrus written by Matthew Remski, a yoga teacher. It described their experiences of being groped, kissed, even fingered through yoga tights.

During Mr. Jois’s lifetime, some people did try to intervene. Farley Harding, now 58, was in India studying with Mr. Jois in 1995.

After becoming disgusted by what he saw Mr. Jois do to women students — “grabbing their asses and kissing them,” Mr. Harding said — he privately confronted Mr. Jois. “I said, ‘You are a teacher and we are students, and what you’re doing to students is wrong.’”

Mr. Harding said that Mr. Jois acted like he didn’t understand, which Mr. Harding didn’t believe. He stopped studying with Mr. Jois.

Another person who spoke up is Micki Evslin. In 2002, when she was 54, she went to a workshop Mr. Jois led in Hawaii.

At one point, he instructed the 150 or so students to take a forward bend. “I could kind of see behind me,” she said, “his little feet coming up. And, I thought, ‘Oh! Pattabhi Jois is going to correct me.’ And he put his fingers under my coccyx bone and kind of used it as a lever to yank me up.”

It surprised her, but it didn’t offend her. A few minutes later, when Mr. Jois called for the class to do a wide-leg forward bend, she saw his feet approach again. “My head’s on the floor. My feet are far apart,” she said. “And, this time, he jammed his two fingers into my vagina, basically forcefully, because he had to go through tights and underpants.”

She froze. “You don’t want to create a disturbance,” Ms. Evslin said. “You’re not sure what to do. And, you’re processing everything. Like, ‘What do I say? How do I handle this?’”

The #MeToo movement may have helped to provide clarity about certain interactions between boss and employee, clergy and believer, doctor and patient.

A yoga teacher lying genital to genital on a student can now be added to that list.

Stark stories of harassment and abuse have also revealed how complicated it can be to navigate more ambiguous situations. I interviewed more than 50 yoga practitioners, teachers and studio owners about touch in yoga. What I came to realize is that there may be no grayer gray zone than a yoga studio, where physical intimacy, spirituality and power dynamics come together in a sweaty little room.

Kest’s teacher training curriculum was acquired in 2011 by Life Time Inc., a chain of athletic and fitness centers with nearly 150 locations around the country. Mr. Kest is the chain’s “teacher of teachers”. This year alone he has overseen the training of 800 yogis. By the end of the year, the program will have brought in $2.4 million to Life Time.

During the class, she said, Mr. Kest talked about intimacy and passion. While Ms. Donnell was in one pose, on her back with an ankle over a knee in a figure four, Mr. Kest approached her and took hold of her extended foot.

Facing her, he wedged her foot into his groin. He then leaned toward her and put his open palms on her chest, touching part of her breast.

In the final resting pose, he came by again. “He placed his hand again on my breast, then he moved his hand down to my lower pelvis,” Ms. Donnell said.

She didn’t know what to make of the experience. She wondered if she was being uptight.

Five years later, she feels more clarity: “If he had walked around and said to me, ‘Is it O.K. if I wedge your foot in my pelvis near my crotch, near my private part?’ I would have said, ‘No, that’s not O.K.’”

NY Times

Daily exposure to blue light may accelerate aging, even if it doesn’t reach your eyes

“Light is necessary for life, but prolonged exposure to artificial light is a matter of increasing health concern. Humans are exposed to increased amounts of light in the blue spectrum produced by light-emitting diodes (LEDs), which can interfere with normal sleep cycles. The LED technologies are relatively new; therefore, the long-term effects of exposure to blue light across the lifespan are not understood. We investigated the effects of light in the model organism, Drosophila melanogaster, and determined that flies maintained in daily cycles of 12-h blue LED and 12-h darkness had significantly reduced longevity compared with flies maintained in constant darkness or in white light with blue wavelengths blocked. Exposure of adult flies to 12 h of blue light per day accelerated aging phenotypes causing damage to retinal cells, brain neurodegeneration, and impaired locomotion. We report that brain damage and locomotor impairments do not depend on the degeneration in the retina, as these phenotypes were evident under blue light in flies with genetically ablated eyes. Blue light induces expression of stress-responsive genes in old flies but not in young, suggesting that cumulative light exposure acts as a stressor during aging. We also determined that several known blue-light-sensitive proteins are not acting in pathways mediating detrimental light effects. Our study reveals the unexpected effects of blue light on fly brain and establishes Drosophila as a model in which to investigate long-term effects of blue light at the cellular and organismal level.”

“Prolonged exposure to blue light, such as that which emanates from your phone, computer, and household fixtures, could be affecting your longevity, even if it’s not shining in your eyes.

New research at Oregon State University suggests that the blue wavelengths produced by light-emitting diodes damage cells in the brain as well as retinas.

The study, published today in Aging and Mechanisms of Disease, involved a widely used organism, Drosophila melanogaster, the common fruit fly, an important model organism because of the cellular and developmental mechanisms it shares with other animals and humans.

Jaga Giebultowicz, a researcher in the OSU College of Science who studies biological clocks, led a research collaboration that examined how flies responded to daily 12-hour exposures to blue LED light – similar to the prevalent blue wavelength in devices like phones and tablets – and found that the light accelerated aging.

Flies subjected to daily cycles of 12 hours in blue light and 12 hours in darkness had shorter lives compared with flies kept in total darkness or those kept in light with the blue wavelengths filtered out. The flies exposed to blue light showed damage to their retinal cells and brain neurons and had impaired locomotion: the flies’ ability to climb the walls of their enclosures, a common behavior, was diminished.

Some of the flies in the experiment were mutants that do not develop eyes, and even those eyeless flies displayed brain damage and locomotion impairments, suggesting flies didn’t have to see the light to be harmed by it.

“The fact that the light was accelerating aging in the flies was very surprising to us at first,” said Giebultowicz, a professor of integrative biology. “We’d measured expression of some genes in old flies, and found that stress-response, protective genes were expressed if flies were kept in light. We hypothesized that light was regulating those genes. Then we started asking, what is it in the light that is harmful to them, and we looked at the spectrum of light. It was very clear cut that although light without blue slightly shortened their lifespan, just blue light alone shortened their lifespan very dramatically.”

Natural light, Giebultowicz notes, is crucial for the body’s circadian rhythm – the 24-hour cycle of physiological processes such as brain wave activity, hormone production and cell regeneration that are important factors in feeding and sleeping patterns.

“But there is evidence suggesting that increased exposure to artificial light is a risk factor for sleep and circadian disorders,” she said. “And with the prevalent use of LED lighting and device displays, humans are subjected to increasing amounts of light in the blue spectrum since commonly used LEDs emit a high fraction of blue light. But this technology, LED lighting, even in most developed countries, has not been used long enough to know its effects across the human lifespan.”

Giebultowicz says that the flies, if given a choice, avoid blue light.

“We’re going to test if the same signaling that causes them to escape blue light is involved in longevity,” she said.

A faculty research assistant in Giebultowicz’s lab, who is a co-first author of the study, notes that advances in technology and medicine could work together to address the damaging effects of light if this research eventually proves applicable to humans.

“Human lifespan has increased dramatically over the past century as we’ve found ways to treat diseases, and at the same time we have been spending more and more time with artificial light,” she said. “As science looks for ways to help people be healthier as they live longer, designing a healthier spectrum of light might be a possibility, not just in terms of sleeping better but in terms of overall health.”

In the meantime, there are a few things people can do to help themselves that don’t involve sitting for hours in darkness, the researchers say. Eyeglasses with amber lenses will filter out the blue light and protect your retinas. And phones, laptops and other devices can be set to block blue emissions.

“In the future, there may be phones that auto-adjust their display based on the length of usage the phone perceives,” said lead author Trevor Nash, a 2019 OSU Honors College graduate who was a first-year undergraduate when the research began. “That kind of phone might be difficult to make, but it would probably have a big impact on health.” Neuroscience Journal & Nature Website

October 2019:

DNA TESTING COMPANY SETTLES HEALTH CARE FRAUD ALLEGATIONS FOR $42.6 MILLION, BANNED FROM FEDERAL PROGRAMS FOR 25 YEARS

“Louisiana genetic testing company UTC Laboratories Inc. has agreed to pay $42.6 million in a settlement that will resolve allegations of violating the False Claims Act. According to a statement from the Department of Justice dated October 9, UTC had allegedly paid kickbacks for lab referrals and billed for testing that was not medically necessary.

UTC, also known as RenRX, agreed to be excluded from all federal health care programs for 25 years.

Between 2013 and 2017, UTC allegedly paid physicians to order pharmacogenetic testing, which examines how a person’s genes affect their response to drugs. The National Institutes of Health says the aim of pharmacogenetics is “to develop effective, safe medications and doses that will be tailored to a person’s genetic makeup.”

In return, patients were allegedly recruited to take part in genetic testing for the Diagnosing Adverse Drug Reactions Registry (DART). The study required patients to have DNA testing done via buccal swab, which collects genetic material from the inside of a patient’s cheek.

Government allegations were that UTC Laboratories billed Medicare for these unnecessary tests and paid money, including sales commissions, to those involved with the plan.

“The payment of kickbacks in exchange for medical referrals undermines the integrity of our healthcare system,” said Assistant Attorney General Jody Hunt of the Department of Justice’s Civil Division. “Today’s settlement reflects the Department of Justice’s commitment to ensuring that taxpayer monies are well spent and not wasted on unnecessary medical testing.”

The three principals of UTC Laboratories also agreed to pay $1 million as part of the settlement.

Under the False Claims Act (FCA), a person is liable for fraudulently seeking money from the government only if they knew that the information submitted to obtain payment was false.

The settlement involves allegations in six lawsuits filed in Louisiana under the whistleblower provisions of the FCA. These provisions allow private citizens to file suit and share in any monies recovered.” Newsweek

Key to learning and forgetting identified in sleeping brain

“Distinct patterns of electrical activity in the sleeping brain may influence whether we remember or forget what we learned the previous day, according to a new study by UC San Francisco researchers. The scientists were able to influence how well rats learned a new skill by tweaking these brainwaves while animals slept, suggesting potential future applications in boosting human memory or forgetting traumatic experiences, the researchers say.

In the new study, published online October 3 in the journal Cell, a research team led by Karunesh Ganguly, MD, PhD, an associate professor of neurology and member of the UCSF Weill Institute for Neurosciences, used a technique called optogenetics to dampen specific types of brain activity in sleeping rats at will.

This allowed the researchers to determine that two distinct types of slow brain waves seen during sleep, called slow oscillations and delta waves, respectively strengthened or weakened the firing of specific brain cells involved in a newly learned skill — in this case how to operate a water spout that the rats could control with their brains via a neural implant.

“We were astonished to find that we could make learning better or worse by dampening these distinct types of brain waves during sleep,” Ganguly said. “In particular, delta waves are a big part of sleep, but they have been less studied, and nobody had ascribed a role to them. We believe these two types of slow waves compete during sleep to determine whether new information is consolidated and stored, or else forgotten.”

“Linking a specific type of brain wave to forgetting is a new concept,” Ganguly added. “More studies have been done on strengthening of memories, fewer on forgetting, and they tend to be studied in isolation from one another. What our data indicate is that there is a constant competition between the two — it’s the balance between them that determines what we remember.”

Some Sleep to Remember, Others to Forget

Over the past two decades the centuries-old human hunch that sleep plays a role in the formation of memories has been increasingly supported by scientific studies. Animal studies show that the same neurons involved in forming the initial memory of a new task or experience are reactivated during sleep to consolidate these memory traces in the brain. Many scientists believe that forgetting is also an important function of sleep — perhaps as a way of uncluttering the mind by eliminating unimportant information.

Slow oscillations and delta waves are hallmarks of so-called non-REM sleep, which — in humans, at least — makes up half or more of a night’s sleep. There is evidence that these non-REM sleep stages play a role in consolidating various kinds of memory, including the learning of motor skills. In humans, researchers have found that time spent in the early stages of non-REM sleep is associated with better learning of a simple piano riff, for instance.

Ganguly’s team began studying the role of sleep in learning as part of their ongoing efforts to develop neural implants that would allow people with paralysis to more reliably control robotic limbs with their brain. In early experiments in laboratory animals, he had noted that the biggest improvements in the animals’ ability to operate these brain-computer interfaces occurred when they slept between training sessions.

“We realized that we needed to understand how learning and forgetting occur during sleep to understand how to truly integrate artificial systems into the brain,” Ganguly said.” Neuroscience Journal

Zuckerberg promised to ‘fight’ efforts by Sen. Warren to break up Facebook in leaked comments

“Facebook chief executive Mark Zuckerberg told employees that the company was ready to “go to the mat and … fight” if Sen. Elizabeth Warren, a leading Democratic presidential candidate, follows through on her vow to try to break up major technology firms, according to a leaked recording of his remarks published Tuesday.

“If she gets elected president, then I would bet that we will have a legal challenge, and I would bet that we will win the legal challenge. And does that still suck for us? Yeah,” Zuckerberg said in a July meeting with employees, a transcript of which was published by the technology news site the Verge.

He added: “I don’t want to have a major lawsuit against our own government. I mean, that’s not the position that you want to be in when you’re, you know, I mean … it’s like, we care about our country and want to work with our government and do good things. But look, at the end of the day, if someone’s going to try to threaten something that existential, you go to the mat and you fight.”

Senator Warren (D-Mass.) soon responded over Twitter, “What would really ‘suck’ is if we don’t fix a corrupt system that lets giant companies like Facebook engage in illegal anticompetitive practices, stomp on consumer privacy rights, and repeatedly fumble their responsibility to protect our democracy.”

The unusually direct exchange between a presidential candidate and a major corporate leader underscored the heightened political stakes for Silicon Valley ahead of the 2020 election. While many technology leaders have been visibly uneasy with President Trump — who routinely attacks the industry over its alleged bias against conservatives and other issues — there also is discomfort with the calls by Warren and other Democrats to regulate technology firms more aggressively than in the past.

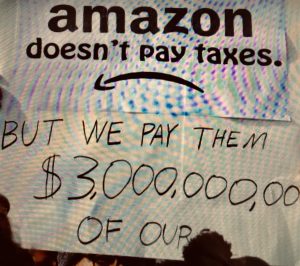

The most alarming proposal to technology leaders is the call by Warren and some other liberals to dismantle major companies such as Google, Amazon and Facebook for alleged antitrust violations. Chris Hughes, who co-founded Facebook with Zuckerberg, has been among those calling for a breakup of the company on the grounds that it has grown too powerful.

After Zuckerberg’s comments were published Tuesday, Warren took advantage of the news moment to tout her plan, “How We Can Break Up Big Tech,” linking to it in a tweet saying, “I’m not afraid to hold Big Tech companies like Facebook, Google, and Amazon accountable. It’s time to #BreakUpBigTech.”

Amazon chief executive Jeff Bezos owns The Washington Post.”

Washington Post

September 2019:

“Superagers” over 80 have the memory and brain connectivity of twenty-somethings

“Research published in the journal Cerebral Cortex has shown that stronger functional connectivity—that is, communication among neurons in various networks of the brain—is linked to youthful memory in older adults. Those with superior memories—called superagers—have the strongest connectivity.

The work is the second in a series of three studies undertaken to unlock the secret of something researchers already knew: that some adults in their 80s and 90s function cognitively as well as or better than much younger people.

The first study showed that when compared with typical older adults, the brains of superagers are larger in certain areas that are important for processes that contribute to memory, including learning, storing, and retrieving information. But brain regions are not isolated islands; they form networks that “talk” to one another to allow for complex behaviors.

“This communication between brain regions is disrupted during normal aging,” said Alexandra Touroutoglou, Ph.D., an investigator in the MGH Department of Neurology and the Athinoula A. Martinos Center for Biomedical Imaging. “Superagers show not just youthful brain structure, but youthful connectivity as well.”

The current study looked at superagers, typical adults from 60 to 80 years old, and young adults 18 to 35. It used functional magnetic resonance imaging (fMRI) to examine the synchronization of brain waves in the default mode network (DMN) and salience network (SN) of participants in a resting state.

“These networks ebb and flow, or oscillate, whether you’re in a resting state or engaged in a task,” said Bradford C. Dickerson, MD, director of MGH’s Frontotemporal Disorders Unit. “Our prediction was that typical older adults would have less synchronization in these brain waves—less efficient networks—but that superagers would have networks as efficient as the young adults. And that’s what we found.”

The research team’s next study will analyze fMRI data from brains engaged in memory and other cognitive tasks. It is hoped that taken together, the studies will “provide basics for future researchers to develop biomarkers of successful aging,” said Touroutoglou, who is also an instructor in neurology at Harvard Medical School, noting that one of the mysteries scientists hope to tease out is whether superagers start off with “bigger and better” brain structure and communication than other people or if they are somehow more resilient to the declines of normal aging. Future research may then measure the effects of genetics as well as exercise, diet, social connectedness, and other lifestyle factors that have been shown to affect resilience in older adults.

“We hope to identify things we can prescribe for people that would help them be more like a superager,” said Dickerson, who is also an associate professor of neurology at Harvard Medical School. “It’s not as likely to be a pill as more likely to be recommendations for lifestyle, diet, and exercise. That’s one of the long-term goals of this study—to try to help people become superagers if they want to.”

Neuroscience Journal

Genes associated with left-handedness linked with shape of the brain’s language regions

“A new study has for the first time identified regions of the genome associated with left-handedness in the general population and linked their effects with brain architecture. The study linked these genetic differences with the connections between areas of the brain related to language.

It was already known that genes have a partial role in determining handedness – studies of twins have estimated that 25% of the variation in handedness can be attributed to genes – but which genes these are had not been established in the general population.

Of the four genetic regions the researchers identified, three were associated with proteins involved in brain development and structure. In particular, these proteins were related to microtubules, which are part of the scaffolding inside cells, called the cytoskeleton, which guides the construction and functioning of the cells in the body.

Using detailed brain imaging from approximately 10,000 of the study’s participants, the researchers found that these genetic effects were associated with differences in brain structure, in white matter tracts – which contain the cytoskeleton of the brain – that join language-related regions.

‘We discovered that, in left-handed participants, the language areas of the left and right sides of the brain communicate with each other in a more coordinated way. This raises the intriguing possibility for future research that left-handers might have an advantage when it comes to performing verbal tasks, but it must be remembered that these differences were only seen as averages over very large numbers of people and not all left-handers will be similar.’

The researchers also found correlations between the genetic regions involved in left-handedness and a very slightly lower chance of having Parkinson’s disease, but a very slightly higher chance of having schizophrenia. However, the researchers stressed that these links only correspond to a very small difference in the actual number of people with these diseases, and are correlational so they do not show cause-and-effect. Studying the genetic links could help to improve understanding of how these serious medical conditions develop.”

Neuroscience Journal

The spy in your wallet: Credit cards have a privacy problem

“I recently used my credit card to buy a banana. Then I tried to figure out how my credit card let companies buy me.

You might think my 29-cent swipe at Target would be just between me and my bank. Heavens, no. My banana generated data that’s probably worth more than the banana itself. It ended up with marketers, Target, Amazon, Google and hedge funds, to name a few.

Oh, the places a banana will go in the sprawling card-data economy. Despite a federal privacy law covering cards, I found that six types of businesses could mine and share elements of my purchase, multiplied untold times by other companies they might have passed it to. Credit cards are a spy in your wallet — and it’s time that we add privacy, alongside rewards and rates, to how we evaluate them.

Apple, branching out from gadgets, just began offering a needed alternative. The new Apple Card’s best attribute is privacy; it restrains bank partner Goldman Sachs from selling or sharing your data with marketers. But the Apple Card, which runs on the Mastercard network, doesn’t introduce much new technology to protect you from a lot of other hands grabbing at the till.

With my banana test (two bananas, one purchased with the Amazon Prime Rewards Visa and the other with the Mastercard-based Apple Card), I hoped to uncover the secret life of my credit card data. But in this murky industry, I was only partly successful.

Instead, I asked insiders and privacy advocates to help identify the types of companies that had given themselves access to my swipe for purposes unrelated to payment and preventing fraud. “Where does it end? Nobody really knows.”

I pored over these companies’ privacy policies. Then I asked more than two dozen to get specific about what they actually do with our transactions. What data are they sharing and with whom?

Some didn’t answer. Others sent me to a Bermuda Triangle of legalese where few straight answers escaped alive. In 2019, it’s hard to trust companies that don’t think they owe us clarity about data.

What I learned: The card data business is booming as a tool for advertisers, for aiding investors and for helping retailers and banks encourage more spending. And there are many ways a card swipe can be exploited that don’t always require a transaction to be “sold” or “shared” in a way that fully identifies you. Data can be aggregated, anonymized, hashed or pseudonymized (given a new name), or used to target you without ever technically changing hands.

What’s the harm? We’re legally protected from fraudulent charges and unfair lending practices. But spending patterns can reveal lots — possibly enough to blackmail you. Anytime data passes to new hands, there’s another chance it could get stolen.

And card data surely helps businesses but can put consumers at a relative disadvantage. “The more they know about you, the more opportunities there are for manipulation,” says a law and information professor at the University of California at Berkeley. Data can be used to model behaviors, such as figuring out exactly how many price hikes or awful experiences customers will put up with before bolting.

People can have different views on whether it’s worth exchanging data for airline miles or cash back. But how are we supposed to make informed decisions when we don’t know where our data is going?

So who all can track, mine or share your transactions? Let’s unravel the six kinds of companies that sold me out:

1) The bank

When I swiped my cards, of course my banks received data. What’s surprising is who they can share it with. My data helped identify me to Chase’s marketing partners, who send me junk mail. Some data even got fed to retail giant Amazon because it co-branded my card.

Banks have long been required to report suspicious transactions to the government. But the 1999 Gramm-Leach-Bliley Act also allows banks to share personally identifiable data with companies. They just have to send a privacy notice and give you the right to opt out.

For my Visa, Chase reserves the right to share my data for seven different kinds of reasons. The most appalling category is: “For nonaffiliates to market to you.” Who are “nonaffiliates?” Whoever the bank darned well wants. The term just means a company not owned by Chase.

Chase would not tell me the specific data it shared from my card or the companies it shared it with. Instead, spokeswoman Patricia Wexler listed kinds of data Chase doesn’t share — including “personalized transaction level data.” But that leaves room for lots of uses. Chase, for example, opts us in to receiving offers from partner companies based on our spending habits.

Co-branded card partners get a piece of the action, too. Of course Amazon receives data when you buy products on Amazon with its card. What about other purchases? Chase says it shares information with co-brand partners “at a high level only — not specific details around which merchant, and not specific items purchased,” but Wexler declined to be specific. Amazon also wouldn’t say exactly what it receives. (Amazon chief executive Jeff Bezos owns The Washington Post.)

As a co-branded partner, Apple says it can’t access data about your transactions outside Apple. The details of your purchases, visible in the Wallet app, are encrypted so Apple can’t see them.

2) The card network

This is where Apple’s advantage starts to fade. Once my banana purchase passed to card networks run by Visa and Mastercard, either might have shared it, in an anonymized form, with businesses including tourism bureaus, Google and more.

The networks, whose main business is connecting banks, have side gigs in aggregating purchases and selling them as “data insights.” Visa said it allows clients to see data on populations as small as 50 people, often tied to groups in Zip codes. Mastercard wouldn’t disclose its minimum group size.

One Mastercard program particularly irks privacy advocates; data from millions of Mastercards — now likely including Apple Cards — ends up helping Google track retail sales. Data goes into a double-blind system that lets the Web giant link ads people have seen back to purchases they’ve made in the real world. A person familiar with the matter, who wasn’t authorized to speak about it, confirmed the deal to me.

The firms wouldn’t acknowledge the specific program but emphasized that Mastercard scrubs identifiable information. Mastercard spokesman Jim Issokson said, “Mastercard is not sharing any data or insights for ad measurement purposes to any of the tech giants.” Google spokeswoman Anaik von der Weid said, “We have developed advanced, privacy protective technology in this area, precisely to avoid the sharing of personal information.”

3) The store

To Target, my credit card acted as a kind of ID — each swipe helps build a “guest profile” about me. That’s useful for learning my habits, targeting me with ads on Facebook and sharing information about me with others. It made no difference whether I paid with the Chase Visa or Apple Card.

Target says it does not “sell” our data. But its privacy policy grants it the right to “share your personal information with other companies” who “may use the information we share to provide special offers and opportunities to you.”

Who are these companies: Marketers? Data brokers? Other retailers? Target spokeswoman Jenna Reck wouldn’t say.

What specifically does Target share? That “varies,” Reck said but added, “We provide aggregated, de-identified information whenever possible.”

4) Point-of-sale systems and retailer banks

The tech that helps stores track us often comes from card-swipe machines and the merchant banks that process transactions for them. Those firms gain access to your name, card number and other details and often reserve rights to share data in some form. What are they doing with it?

This is where my data trail gets particularly murky. Target wouldn’t say who its so-called acquiring bank is or what restrictions it places on it.

Square, one maker of those systems, says it does not “sell” that data. But it does pass an email or phone number we enter for receipts back to the merchant. And it shares aggregated data about purchases with organizations including trade groups.

5) Mobile wallets

I paid for my bananas with physical cards, but smartphone-payment systems introduce even more hands on transactions. Apps can access and store not only what you buy but also where you go.

Google Pay for Android stores transactions in your Google account. Google says it doesn’t allow advertisers to target you based on that data. But Google Pay’s default privacy settings, which you can adjust, grant it rights to use your personal information to allow Google companies to market to you.

The Samsung Pay app has details from your past 20 transactions, though the company says the information isn’t stored on its servers. The app also delivers location-based promotions.

6) Financial apps

Many free financial services are after your data. Intuit’s Mint, which lets you track all your accounts in one place, uses your data to market to you in its app. Financial software maker Yodlee sells de-identified data from customers to market research firms, retailers and investors.

Your email might also be a mole. Anytime you receive a receipt in Gmail, Google adds it to a purchases database. Google says it doesn’t use the contents of Gmail to target ads, but that leaves open other uses.

Opting out

If you care about privacy, there are steps you can take to better protect your life as a consumer — but it’s patchwork.

Perhaps it goes without saying, but you could pay with cash. That won’t help if you swipe a loyalty card … or once businesses start using facial recognition.

I’m disappointed Apple didn’t build new kinds of privacy technology to help counteract all the other categories of companies using each swipe. With data, the devil is in the defaults: companies know a vanishingly small number of people will ever adjust settings. And how can we exercise a choice if we don’t even know what’s happening with our data?

The recent headlines about Facebook and Equifax opened a lot more eyes to how privacy affects our lives in unforeseen ways. Other businesses should take that as a warning: Data is the new corporate social responsibility.

If a company wants our trust, it’s no longer good enough to say, “We care about your privacy,” and point to some legalese. It’s time to come clean.”

Washington Post

August 2019:

THE NEUROLOGIST WHO HACKED HIS BRAIN—AND ALMOST LOST HIS MIND

Researcher as Human Guinea Pig

Phil Kennedy hired a neurosurgeon in Belize to implant several electrodes in his brain and then insert a set of electronic components beneath his scalp. Back at home, Kennedy used this system to record his own brain signals in a months-long battery of experiments. His goal: Crack the neural code of human speech.

After that, Kennedy still had trouble finding words for things—he might look at a pencil and call it a pen—but his fluency improved. Once Cervantes, his neurosurgeon in Belize, felt his client had gotten halfway back to normal, he cleared him to go home. His early fears of having damaged Kennedy for life turned out to be unfounded; the language loss that left his patient briefly locked in was just a symptom of postoperative brain swelling. With that under control, he would be fine.

By the time Kennedy was back at his office seeing patients just a few days later, the clearest remaining indications of his Central American adventure were some lingering pronunciation problems and the sight of his shaved and bandaged head, which he sometimes hid beneath a multicolored Belizean hat. For the next several months, Kennedy stayed on anti-seizure medications as he waited for his neurons to grow inside the three cone electrodes in his skull.

Then, in October that same year, Kennedy flew back to Belize for a second surgery, this time to have a power coil and radio transceiver connected to the wires protruding from his brain. That surgery went fine, though both Powton and Cervantes were nonplussed at the components that Kennedy wanted tucked under his scalp. “I was a little surprised they were so big,” Powton says. The electronics had a clunky, retro look to them. Powton, who tinkers with drones in his spare time, was mystified that anyone would sew such an old-fangled gizmo inside his head: “I was like, ‘Haven’t you heard of microelectronics, dude?’ ”

Kennedy began the data-gathering phase of his grand self-experiment as soon as he returned home from Belize for the second time. The week before Thanksgiving, he went into his lab and balanced a magnetic power coil and receiver on his head. Then he started to record his brain activity as he said different phrases out loud and to himself—things like “I think she finds the zoo fun” and “The joy of a job makes a boy say wow”—while tapping a button to help sync his words with his neural traces, much like the way a filmmaker’s clapper board syncs picture and sound.

Over the next seven weeks, he spent most days seeing patients from 8 am until 3:30 pm and then used the evenings after work to run through his self-administered battery of tests. In his laboratory notes he is listed as Subject PK, as if to anonymize himself. His notes show that he went into the lab on Thanksgiving and on Christmas Eve.

The experiment didn’t last as long as he would have liked. The incision in his scalp never fully closed over the bulky mound of his electronics. After having had the full implant in his head for a total of just 88 days, Kennedy went back under the knife. But this time he didn’t bother going to Belize: A surgery to safeguard his health needed no approval from the FDA and would be covered by his regular insurance.

On January 13, 2015, a local surgeon opened up Kennedy’s scalp, snipped the wires coming from his brain, and removed the power coil and transceiver. He didn’t try to dig around in Kennedy’s cortex for the tips of the three glass cone electrodes that were embedded there. It was safer to leave those where they lay, enmeshed in Kennedy’s brain tissue, for the rest of his life.

Loss for Words

Yes, it’s possible to communicate directly via your brain waves. But it’s excruciatingly slow. Other substitutes for speech get the job done faster.

Kennedy’s lab sits in a leafy office park on the outskirts of Atlanta, in a yellow clapboard house. A shingle hanging out front identifies Suite B as the home of the Neural Signals Lab. When I meet Kennedy there one day in May 2015, he’s dressed in a tweed jacket and a blue-flecked tie, and his hair is neatly parted and brushed back from his forehead in a way that reveals a small depression in his left temple. “That’s when he was putting the electronics in,” Kennedy says with a slight Irish accent. “The retractor pulled on a branch of the nerve that went to my temporalis muscle. I can’t lift this eyebrow.” Indeed, I notice that the operation has left his handsome face with an asymmetric droop.

Kennedy agrees to show me the video of his first surgery in Belize, which has been saved to an old-fashioned CD-ROM. As I mentally prepare myself to see the exposed brain of the man standing next to me, Kennedy places the disc into the drive of a desktop computer running Windows 95. It responds with an awful grinding noise, like someone slowly sharpening a knife.

The disc takes a long time to load—so long that we have time to launch into a conversation about his highly unconventional research plan. “Scientists have to be individuals,” he says. “You can’t do science by committee.” As he goes on to talk about how the US too was built by individuals and not committees, the disc drive’s grunting takes on the timbre of a wagon rolling down a rocky trail: ga-chugga-chug, ga-chugga-chug. “Come on, machine!” he says, interrupting his train of thought as he clicks impatiently at some icons on the screen. “Oh for heaven’s sake, I just have inserted the disc!”

“I think people overrate brain surgery as being so terribly dangerous,” he goes on. “Brain surgery is not that difficult.” Ga-chugga-chug, ga-chugga-chug, ga-chugga-chug. “If you’ve got something to do scientifically, you just have to go and do it and not listen to naysayers.”

At last a video player window opens on the PC, revealing an image of Kennedy’s skull, his scalp pulled away from it with clamps. The grunting of the disc drive is replaced by the eerie, squeaky sound of metal bit on bone. “Oh, so they’re still drilling my poor head,” he says as we watch his craniotomy begin to play out onscreen.

“Just helping ALS patients and locked-in patients is one thing, but that’s not where we stop,” Kennedy says, moving on to the big picture. “The first goal is to get the speech restored. The second goal is to restore movement, and a lot of people are working on that—that’ll happen, they just need better electrodes. And the third goal would then be to start enhancing normal humans.”

He clicks the video ahead, to another clip in which we see his brain exposed—a glistening patch of tissue with blood vessels crawling all along the top. Cervantes pokes an electrode down into Kennedy’s neural jelly and starts tugging at the wire. Every so often a blue-gloved hand pauses to dab the cortex with a Gelfoam to stanch a plume of blood.

“Your brain will be infinitely more powerful than the brains we have now,” Kennedy continues, as his brain pulsates onscreen. “We’re going to extract our brains and connect them to small computers that will do everything for us, and the brains will live on.” “You’re excited for that to happen?” I ask.

“Pshaw, yeah, oh my God,” he says. “This is how we’re evolving.”

Sitting there in Kennedy’s office, staring at his old computer monitor, I’m not so sure I agree. It seems like technology always finds new and better ways to disappoint us, even as it grows more advanced every year. My smartphone can build words and sentences from my sloppy finger-swipes. But I still curse at its mistakes. (Damn you, autocorrect!) I know that, around the corner, technology far better than Kennedy’s juddering computer, his clunky electronics, and my Google Nexus 5 phone is on its way. But will people really want to entrust their brains to it?

On the screen, Cervantes jabs another wire through Kennedy’s cortex. “The surgeon is very good, actually, a very nice pair of hands,” Kennedy said when we first started watching the video. But now he deviates from our discussion about evolution to bark orders at the screen, like a sports fan in front of a TV. “No, don’t do that, don’t lift it up,” Kennedy says to the pair of hands operating on his brain. “It shouldn’t go in at that angle,” he explains to me before turning back to the computer. “Push it in more than that!” he says. “OK, that’s plenty, that’s plenty. Don’t push anymore!”

These days, invasive brain implants have been going out of style. The major funders of neural prosthesis research favor an approach that involves laying a flat grid of electrodes, 8 by 8 or 16 by 16 of them, across the naked surface of the brain. This method, called electrocorticography, or ECoG, provides a more blurred-out, impressionistic measure of activity than Kennedy’s: Instead of tuning to the voices of single neurons, it listens to a bigger chorus—or, I suppose, committee—of them, as many as hundreds of thousands of neurons at a time.

Proponents of ECoG argue that these choral traces can convey enough information for a computer to decode the brain’s intent—even what words or syllables a person means to say. Some smearing of the data might even be a boon: You don’t want to fixate on a single wonky violinist when it takes a symphony of neurons to move your vocal cords and lips and tongue. The ECoG grid can also safely stay in place under the skull for a long time, perhaps even longer than Kennedy’s cone electrodes. “We don’t really know what the limits are, but it’s definitely years or decades,” says Edward Chang, a surgeon and neurophysiologist at UC San Francisco, who has become one of the leading figures in the field and who is working on a speech prosthesis of his own.

Last summer, as Kennedy was gathering his data to present it at the 2015 meeting of the Society for Neuroscience, another lab published a new procedure for using computers and cranial implants to decode human speech. Called Brain-to-Text, it was developed at the Wadsworth Center in New York in collaboration with researchers in Germany and the Albany Medical Center, and it was tested on seven epileptic patients with implanted ECoG grids. Each subject was asked to read aloud—sections of the Gettysburg Address, the story of Humpty Dumpty, John F. Kennedy’s inaugural, and an anonymous piece of fan fiction related to the TV show Charmed—while their neural data was recorded. Then the researchers used the ECoG traces to train software for converting neural data into speech sounds and fed its output into a predictive language model—a piece of software that works a bit like the speech-to-text engine on your phone—that could guess which words were coming based on what had come before.

Kennedy is tired of the Zeno’s paradox of human progress. He has no patience for getting halfway to the future. That’s why he adamantly pushes forward.

Incredibly, the system kind of worked. The computer spat out snippets of text that bore more than a passing resemblance to Humpty Dumpty, Charmed fan fiction, and the rest. “We got a relationship,” says Gerwin Schalk, an ECoG expert and coauthor of the study. “We showed that it reconstructed spoken text much better than chance.” Earlier speech prosthesis work had shown that individual vowel sounds and consonants could be decoded from the brain; now Schalk’s group had shown that it’s possible—though difficult and error-prone—to go from brain activity to fully spoken sentences.

But even Schalk admits that this was, at best, a proof of concept. It will be a long time before anyone starts sending fully formed thoughts to a computer, he says—and even longer before anyone finds it really useful. Think about speech-recognition software, which has been around for decades, Schalk says. “It was probably 80 percent accurate in 1980 or something, and 80 percent is a pretty remarkable achievement in terms of engineering. But it’s useless in the real world,” he says. “I still don’t use Siri, because it’s not good enough.”

In the meantime, there are far simpler and more functional ways to help people who have trouble speaking. If a patient can move a finger, he can type out messages in Morse code. If a patient can move her eyes, she can use eye-tracking software on a smartphone. “These devices are dirt cheap,” Schalk says. “Now you want to replace one of these with a $100,000 brain implant and get something that’s a little better than chance?”

I try to square this idea with all the stunning cyborg demonstrations that have made their way into the media over the years—people drinking coffee with robotic arms, people getting brain implants in Belize. The future always seems so near at hand, just as it did a half century ago when José Delgado stepped into that bullring. One day soon we’ll all be brains inside computers; one day soon our thoughts and feelings will be uploaded to the Internet; one day soon our mental states will be shared and data-mined. We can already see the outlines of this scary and amazing place just on the horizon—but the closer we get, the more it seems to fall back into the distance.

Kennedy, for one, has grown tired of this Zeno’s paradox of human progress; he has no patience for always getting halfway to the future. That’s why he adamantly pushes forward: to prepare us all for the world he wrote about in 2051, the one that Delgado believed was just around the corner.

When Kennedy finally did present the data that he’d gathered from himself—first at an Emory University symposium last May and then at the Society for Neuroscience conference in October—some of his colleagues were tentatively supportive. By taking on the risk himself, by working alone and out-of-pocket, Kennedy managed to create a sui generis record of language in the brain, Chang says: “It’s a very precious set of data, whether or not it will ultimately hold the secret for a speech prosthetic. It’s truly an extraordinary event.” Other colleagues found the story thrilling, even if they were somewhat baffled: In a field that is constantly hitting up against ethical roadblocks, this man they’d known for years, and always liked, had made a bold and unexpected bid to force brain research to its destiny. Still other scientists were simply aghast. “Some thought I was brave, some thought I was crazy,” Kennedy says.

In Georgia, I ask Kennedy if he’d ever do the experiment again. “On myself?” he says. “No. I shouldn’t do this again. I mean, certainly not on the same side.” He taps his temple, where the cone electrode tips are still lodged. Then, as if energized by the idea of putting implants on the other side of his brain, he launches into plans for making new electrodes and more sophisticated implants; for getting back the FDA’s approval for his work; for finding grants so that he can pay for everything.

“No, I shouldn’t do the other side,” he says finally. “Anyway, I don’t have the electronics for it. Ask me again when we’ve built them.” Here’s what I take from my time with Kennedy, and from his garbled answer: You can’t always plan your path into the future. Sometimes you have to build it first. Wired

July 2019:

Now Some Families Are Hiring Coaches to Help Them Raise Phone-Free Children

Screen consultants are here to help you remember life before smartphones and tablets.

Parents around the country are trying to turn back time to the era before smartphones. But it’s not easy to remember what exactly things were like before smartphones. So they’re hiring professionals.

A new screen-free parenting coach economy has sprung up to serve the demand. Screen consultants come into homes, schools, churches and synagogues to remind parents how people parented before.

Rhonda Moskowitz is a parenting coach in Columbus, Ohio. She has a master’s degree in K-12 learning and behavior disabilities, and over 30 years of experience in schools and private practice. She barely needs any of this training now.

“I try to really meet the parents where they are, and now often it is very simple: ‘Do you have a plain old piece of material that can be used as a cape?’” said Ms. Moskowitz. “‘Great!’”

“‘Is there a ball somewhere? Throw the ball,’” she said. “‘Kick the ball.’”

Among affluent parents, fear of phones is rampant, and it’s easy to see why. The wild look their kids have when they try to pry them off Fortnite is alarming. Most parents suspect dinnertime probably shouldn’t be spent on Instagram. The YouTube recommendation engine seems like it could make a young radical out of anyone. Now, major media outlets are telling them their children might grow smartphone-related skull horns.

No one knows what screens will make of society, good or bad. This worldwide experiment of giving everyone an exciting piece of hand-held technology is still new.

Gloria DeGaetano was a private coach working in Seattle to wean families off screens when she noticed the demand was higher than she could handle on her own. She launched the Parent Coaching Institute, a network of 500 coaches and a training program. Her coaches in small cities and rural areas charge $80 an hour. In larger cities, rates range from $125 to $250. Parents typically sign up for eight to 12 sessions.

“If you mess with Mother Nature, it messes with you,” Ms. DeGaetano said of her philosophy. “You can’t be a machine. We’re thinking like machines because we live in this mechanistic milieu. You can’t grow children optimally from principles in a mechanistic mind-set.”

Screen addiction is the top issue parents hope she can cure. Her prescriptions are often absurdly basic.

“Movement,” Ms. DeGaetano said. “Is there enough running around that will help them see their autonomy? Is there a jungle gym or a jumping rope?”

Nearby, Emily Cherkin was teaching middle school in Seattle when she noticed families around her panicking over screens and coming to her for advice. She took surveys of middle school students and teachers in the area.

“I realized I really have a market here,” she said. “There’s a need.”

She quit teaching and opened two small businesses. There’s her intervention work as the Screentime Consultant — and now there’s a co-working space attached to a play space for kids needing “Screentime-Alternative” activities. (That’s playing with blocks and painting.)

A movement reminiscent of the “virginity pledge,” a vogue in the late ’90s in which young people promised to wait until marriage to have sexual contact, is bubbling up across the country.

In this 21st-century version, a group of parents band together and make public promises to withhold smartphones from their children until eighth grade. Now there are local groups cropping up. Parents can gather for phone-free camaraderie in the Turning Life On support community.

Parents who make these pledges work to promote the idea of healthy adult phone use, and promise complete abstinence until eighth grade or even later.

Susannah Baxley’s daughter is in fifth grade.

“I have told her she can have access to social media when she goes to college,” said Ms. Baxley, who is now organizing a phone-delay pledge. So far, she has about 50 parents signed on.

Do parents need the peer pressure of promises, and coaches telling them how to parent?

“It’s not that challenging: Be attentive to your phone use; notice the ways it interferes with being present,” said Erica Reischer, a psychologist and parent coach in San Francisco. “There’s this commercialization of everything that can be commercialized, including this now.”

To Dr. Reischer, the new consultant boom and screen addiction are part of the same problem.

“It’s part of the mind-set that gets us stuck on our phones in the first place — the optimization efficiency mind-set,” Dr. Reischer said. “We want answers served up to us — ‘Just tell me what to do, and I’ll do it.’”

But what seems self-evident can be hard to remember, and hard to stick with.

“Yes, it’s just hearing something that’s so blatantly obvious, but I couldn’t see it,” said Julie Wasserstrom, a 43-year-old mother of two in Bexley, Ohio.

She hired Ms. Moskowitz and found the advice useful.

“She just said things like, ‘Are you telling your kids, “No screens at the table,” but your phone is on your lap?’” Ms. Wasserstrom said. “When we were growing up, we didn’t have these, so our parents couldn’t role model appropriate behaviors to us, and we have to learn what is appropriate so we can role model that for them.”

Ms. Wasserstrom compared screens to a knife or a hot stove.

“You won’t send your kid into the kitchen with a hot stove without giving them instructions or just hand them a knife,” Ms. Wasserstrom said. “You have to be a role model on safe ways to use a knife.” NY Times

Adding a Microchip to Your Brain?

You might risk losing yourself

“As artificial intelligence creates large-scale unemployment, some professionals are attempting to maintain intellectual parity by adding microchips to their brains. Even aside from career worries, it’s not difficult to understand the appeal of merging with A.I. After all, if enhancement leads to superintelligence and extreme longevity, isn’t it better than the alternative — the inevitable degeneration of the brain and body?

At the Center for Mind Design in Manhattan, customers will soon be able to choose from a variety of brain enhancements: Human Calculator promises to give you savant-level mathematical abilities; Zen Garden can make you calmer and more efficient. It is also rumored that if clinical trials go as planned, customers will soon be able to purchase an enhancement bundle called Merge — a series of enhancements allowing customers to gradually augment and transfer all of their mental functions to the cloud over a period of five years.

Unfortunately, these brain chips may fail to do their job for two philosophical reasons. The first involves the nature of consciousness. Notice that as you read this, it feels like something to be you: you are having conscious experience. You are feeling bodily sensations, hearing background noise, seeing the words on the page. Without consciousness, experience itself simply wouldn’t exist.

Many philosophers view the nature of consciousness as a mystery. They believe that we don’t fully understand why all the information processing in the brain feels like something. They also believe that we still don’t understand whether consciousness is unique to our biological substrate, or if other substrates — like silicon or graphene microchips — are also capable of generating conscious experiences.

For the sake of argument, let’s assume microchips are the wrong substrate for consciousness. In this case, if you replaced one or more parts of your brain with microchips, you would diminish or end your life as a conscious being. If this is true, then consciousness, as glorious as it is, may be the very thing that limits our intelligence augmentation. If microchips are the wrong stuff, then A.I.s themselves wouldn’t have this design ceiling on intelligence augmentation — but they would be incapable of consciousness.

You might object, saying that we can still enhance parts of the brain not responsible for consciousness. It is true that much of what the brain does is nonconscious computation, but neuroscientists suspect that our working memory and attentional systems are part of the neural basis of consciousness. These systems are notoriously slow, processing only about four manageable chunks of information at a time. If replacing parts of these systems with A.I. components produces a loss of consciousness, we may be stuck with our pre-existing bandwidth limitations. This may amount to a massive bottleneck on the brain’s capacity to attend to and synthesize data piping in through chips used in areas of the brain that are not responsible for consciousness.

But let’s suppose that microchips turn out to be the right stuff. There is still a second problem, one that involves the nature of the self. Imagine that, longing for superintelligence, you consider buying Merge. To understand whether you should embark upon this journey, you must first understand what and who you are. But what is a self or person? What allows a self to continue existing over time? Like consciousness, the nature of the self is a matter of intense philosophical controversy. And given your conception of a self or person, would you continue to exist after adding Merge, or would you have ceased to exist, having been replaced by someone else? If the latter, why try Merge in the first place?

Even if your hypothetical merger with A.I. brings benefits like superhuman intelligence and radical life extension, it must not involve the elimination of any of what philosophers call “essential properties” — the things that make you you. Even if you would like to become superintelligent, knowingly trading away one or more of your essential properties would be tantamount to suicide — that is, to your intentionally causing yourself to cease to exist. So before you attempt to redesign your mind, you’d better know what your essential properties are.